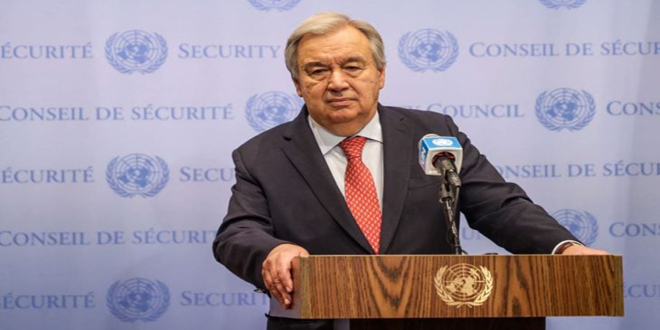

New York, SANA – United Nations Secretary-General António Guterres said he was deeply troubled by reports that the Israeli military’s bombing campaign uses artificial intelligence (AI) as a tool to identify targets, particularly in densely populated residential areas of the besieged Gaza Strip, resulting in a high level of civilian casualties.

No part of life-and-death decisions that impact entire families should be delegated to the cold calculation of algorithms, Guterres said during a press encounter on Gaza, as quoted by WAFA.

“I have warned for many years of the dangers of weaponization of AI and reducing the essential role of human agency.”

AI should be used as a force for good to benefit the world, not to contribute to waging war on an industrial level and blurring accountability, the UN Secretary-General added.

“In its speed, scale and inhumane ferocity, the war in Gaza is the deadliest of conflicts – for civilians, for aid workers, for journalists, for health workers, and for our own colleagues,” he stated.

He said that some 196 humanitarian aid workers, including more than 175 members of the UN’s own staff, have been killed. “The vast majority were serving UNRWA, the backbone of all relief efforts in Gaza.”

Others include colleagues from the World Health Organization and the World Food Programme, as well as humanitarians from Doctors Without Borders, the Red Crescent and, just a few days ago, World Central Kitchen, he added.

Guterres stressed that denying international journalists entry into Gaza allows disinformation and false narratives to flourish.

Regarding aid delivery to the Strip, he said: “Dramatic humanitarian conditions require a quantum leap in the delivery of life-saving aid – a true paradigm shift.”

He repeated urgent appeals for an immediate humanitarian ceasefire, the protection of civilians, and the unimpeded delivery of humanitarian aid to Gaza.

“Six months on, we are on the brink: of mass starvation; regional conflagration; and a total loss of faith in global standards and norms,” he said.

“It’s time to step back from that brink – to silence the guns – to ease the horrible suffering – and to stop a potential famine before it is too late.”

According to a new investigation by +972 Magazine and Local Call, the Israeli army has marked tens of thousands of Gazans as suspects for assassination using an AI targeting system with little human oversight and a permissive policy on casualties. The investigation revealed that the army has developed an artificial intelligence-based program known as “Lavender.”

Syrian Arab News Agency S A N A